This past Wednesday I had the pleasure of attending a virtual Zoom workshop centered on citations and source materials. The workshop was led by Graduate Center librarian Jill Cirasella and NYU guest speaker Margaret Smith. Although this workshop isn’t directly related to the subject matter of Digital Humanities, I still felt it was an important one to attend, because writing and reviewing articles is a big component of graduate work. Even though I am only about two months into my journey toward the coveted master’s degree, I figured it’s better to start learning early how to find the best sources to support my work and how to make sure those sources are legitimate and accurate.

Cirasella started off the discussion by introducing the idea of research impact. She posed qualitative questions such as: How important is an article? How prominent is a researcher? How influential is their body of work? To my surprise, the answer to these questions was a quantitative one: research metrics, the measures used to quantify the amount of influence a piece of scholarly work has. In other words, how many times a publication has been cited in other work. Before I go any further, I should say that this isn’t the definitive answer to those questions, just the most convenient one for those who are evaluating the work. Cirasella made a point of telling us that these metrics don’t show how important or well qualified an article is, but they are definitely looked at when a published piece is being evaluated.

The first research metric the discussion really keyed in on was the h-index. The h-index is the largest number h for which an author has published h articles that have each been cited h or more times. I know that sounds confusing, so here is my attempt at explaining it: say an author has an h-index of 5. That means the author has 5 articles that have each been cited 5 or more times (but not 6 articles that have each been cited 6 or more times). It doesn’t mean the author has published only 5 articles; it means that 5 of their articles have each been cited at least 5 times. If that still sounds ridiculous to you, trust me, you’re not alone. The h-index has had its fair share of criticism and has a reputation for leaving certain variables out of the calculation, such as co-authorship, and for being easy for authors to manipulate (e.g., authors citing their own work in their other work, or getting their author friends to cite it; very shady).
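To make the definition concrete, here is a minimal Python sketch of how an h-index can be computed from a list of per-article citation counts. The function name `h_index` and the citation numbers are hypothetical, made up for illustration; real databases also differ in which citations they count, so the same author can have different h-index values on different platforms.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h articles have at least h citations each."""
    # Rank articles from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    # The article at rank r (1-based) needs at least r citations
    # for the author to reach an h-index of r.
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical author with eight published articles.
papers = [25, 12, 9, 7, 5, 3, 1, 0]
print(h_index(papers))  # → 5

# Four extra self-citations (one to the 5-citation article,
# three to the 3-citation article) would push the index to 6.
gamed = [25, 12, 9, 7, 6, 6, 1, 0]
print(h_index(gamed))  # → 6
```

The second call shows how cheaply the number can be nudged upward, which is exactly the kind of manipulation critics point to.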

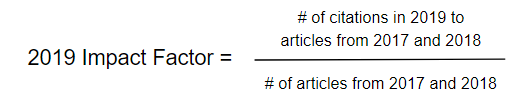

Another metric that was brought up was impact factor. Whereas the h-index measures an individual author, impact factor is used to measure how good a journal is. By definition, a journal’s impact factor for a given year is the number of citations received that year by the articles it published in the two previous years, divided by the number of articles it published in those two years. (See the image below for a better understanding.)
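The arithmetic is simple enough to show in a few lines of Python. This is a minimal sketch with a hypothetical journal and made-up numbers; the official calculation also has rules about which items count as “citable,” which this sketch does not model.

```python
def impact_factor(citations_in_year: int, articles_prev_two_years: int) -> float:
    """Citations received in year Y by articles published in Y-1 and Y-2,
    divided by the number of articles published in Y-1 and Y-2."""
    if articles_prev_two_years == 0:
        raise ValueError("no articles published in the two-year window")
    return citations_in_year / articles_prev_two_years


# Hypothetical journal, evaluated for 2024.
cites_to_2022_articles, cites_to_2023_articles = 300, 200  # citations made during 2024
articles_2022, articles_2023 = 120, 130

jif_2024 = impact_factor(
    cites_to_2022_articles + cites_to_2023_articles,
    articles_2022 + articles_2023,
)
print(jif_2024)  # → 2.0
```

Note that the result is an average over the whole journal: a single heavily cited article can raise it without saying anything about the other articles.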

Impact factor is used to compare journals within the same discipline, and it does get tied to a journal’s reputation and to researchers’ careers. However, much like the h-index, this metric can be manipulated, and it is not an accurate way to judge the quality of an individual article. One alternative that was suggested, which you guys can also check out, is the SCImago Journal Rank (SJR). It is calculated from Scopus citation data, with citations weighted according to the “importance” of the citing journal (where “importance” is itself determined recursively, based on the journals that cite it). On the topic of alternatives, I also want to mention the last metric we looked at: altmetrics. This is another way of judging impact that goes beyond scholarly citations and looks at links from blogs, social media, news articles, Wikipedia, and so on. The downside, however, is an obvious bias toward pieces on buzzworthy, popular topics that people are more inclined to read about.
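The “recursive importance” idea can be hard to picture, so here is a simplified PageRank-style sketch in Python. It is an illustration of the general technique, not SCImago’s actual formula; the real SJR adds a citation window and normalizations that are not modeled here. The journal names, the `damping` value, and the function name are all hypothetical. Each journal starts with an equal score, and on every pass a citation hands on a share of the *citing* journal’s current score, so a citation from a highly ranked journal is worth more than one from an obscure journal.

```python
def recursive_importance(
    cites: dict[str, dict[str, int]], damping: float = 0.85, iterations: int = 50
) -> dict[str, float]:
    """cites[a][b] = number of citations from journal a to journal b."""
    journals = sorted(set(cites) | {b for out in cites.values() for b in out})
    n = len(journals)
    score = {j: 1.0 / n for j in journals}  # everyone starts equal
    for _ in range(iterations):
        # Journals that cite nothing spread their score evenly over all journals.
        dangling = sum(score[j] for j in journals if sum(cites.get(j, {}).values()) == 0)
        new = {j: (1 - damping) / n + damping * dangling / n for j in journals}
        for citing, targets in cites.items():
            total = sum(targets.values())
            if total == 0:
                continue
            for cited, count in targets.items():
                # A citation passes on a share of the citing journal's own score.
                new[cited] += damping * score[citing] * count / total
        score = new
    return score


# Hypothetical citation network: D and E are each cited exactly once,
# but D's citation comes from A (widely cited) and E's from C (cited by no one).
network = {
    "A": {"B": 1, "D": 1},
    "B": {"A": 1},
    "C": {"A": 1, "E": 1},
    "D": {"A": 1},
    "E": {"A": 1},
}
scores = recursive_importance(network)
print(scores["D"] > scores["E"])  # → True
```

Even though D and E have the same raw citation count, D ends up with the higher score because of who cited it, which is the intuition behind weighting citations by the citing journal’s importance.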

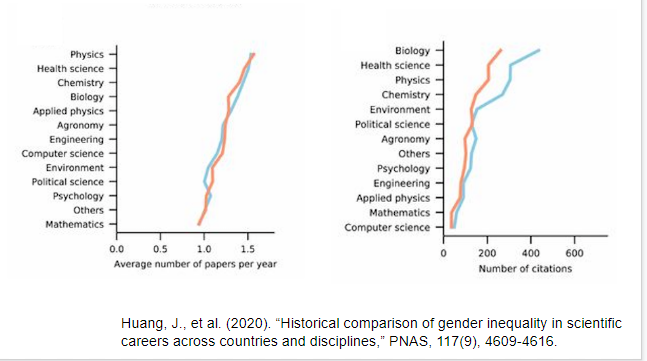

At this point it is clear that each of these research metrics has its drawbacks, which can be a bit annoying. Nevertheless, the most infuriating thing brought up during the workshop was not the metrics themselves but the fact that all of them are influenced by the gender gap that is abundantly present in the world of citation. Margaret Smith ended the workshop by bringing this issue to the forefront. She explained how men are more likely to cite other men in their work, even in fields where the majority of authors are women. In mixed-gender co-authored papers, the male author is also cited more than the female author, and authors with traditionally feminine names are less likely to have their work cited. This implicit bias has long been studied and recognized; below I will provide visual evidence that was shared with us during the presentation.

If you are a fellow female writer, here are some tips from Smith to help with this issue:

- Be consistent in personal/institutional names.

- Retain as many rights as possible (librarians can help!)

- Submit work to a repository (CUNY Academic Works).

- Ensure your non-article research products are citable (e.g., put research data into a repository that assigns a DOI).

- Go beyond numbers.

Overall, I felt this workshop was super insightful, and it has definitely given me a clearer perspective on journal and article citation. I’ll be more mindful of the quality of the work rather than the “popularity” it has garnered through citation counts. At the end of the day, numbers don’t mean anything if they aren’t an accurate reflection of the work. I will also take it upon myself to cite more women in my work to do my part in bridging the gender gap, and I hope you guys will do the same.