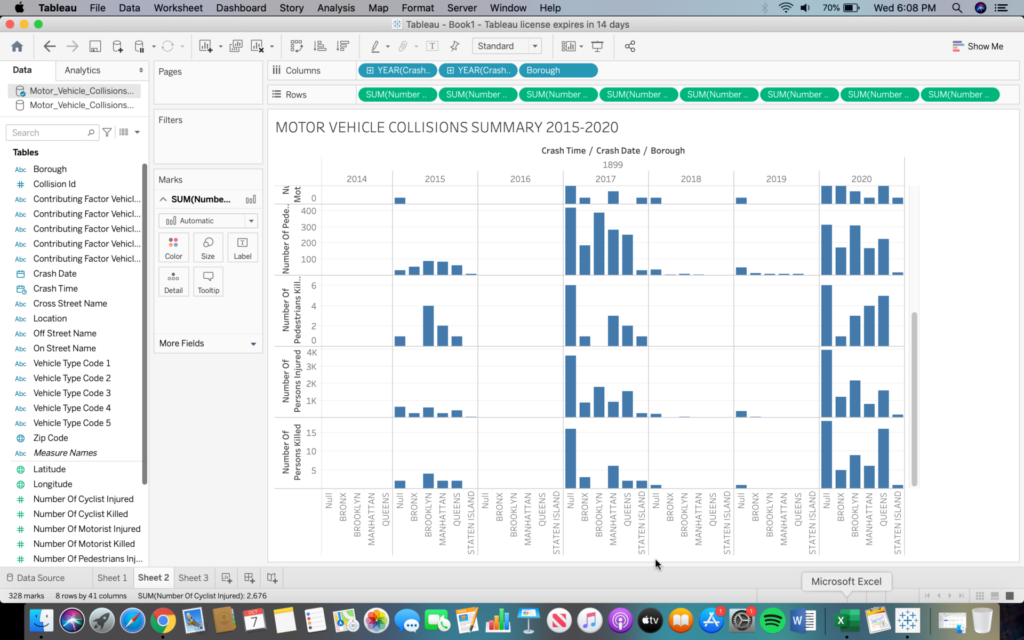

Having just taken part in the HathiTrust digital library workshop I chose to use HathiTrust and their analytical engine to do this text mining assignment. The first step was to find a collection of texts to analyze. Thankfully, HathiTrust has a tab specifically tailored to finding either ready made collections or forming new ones. I chose to use a collection of texts which used the word “detective” in published works prior to 1950. There were only 61 volumes in this particular collection, so it was a good place to start my experiment. It is worth mentioning that should you need to, HathiTrust allows you to make your own collections based on terms you search up. Using this collection I downloaded a TSV file which held all of the volumes in tabs according to the frequency of the word “detective”. HathiTrust’s analytics was located on a different site, where you can upload your TSV file and run algorithms to visualize a topic model of the term used, themes and frequency. Using the InPho Topic Model Explorer I was able to create an interactive topic bubble model. Attached is the link and screenshot of what the map looks like.

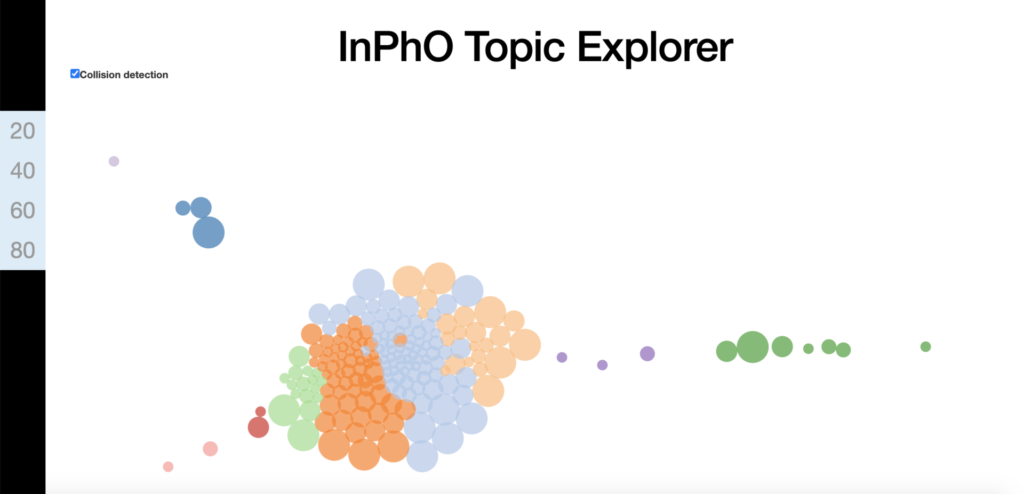

The algorithm groups clusters and color codes them automatically according to groups of topics and similar themes. If you follow the link you will see, for example, that the orange clusters are characterized by fictional stories, and mysteries in which the word “detective” is frequently used. If you use your mouse to hover over each bubble you will see these commonalities mentioned quite often. Also, it is easier to discern similar themes and topics if collision detection is turned off. The above image of the topic model is of the model with collision detection turned on. Below is an image of the topic model with collision detection turned off.

Hovering over the cluster with collision detection turned off makes it easier to see what themes the color coded clusters share.

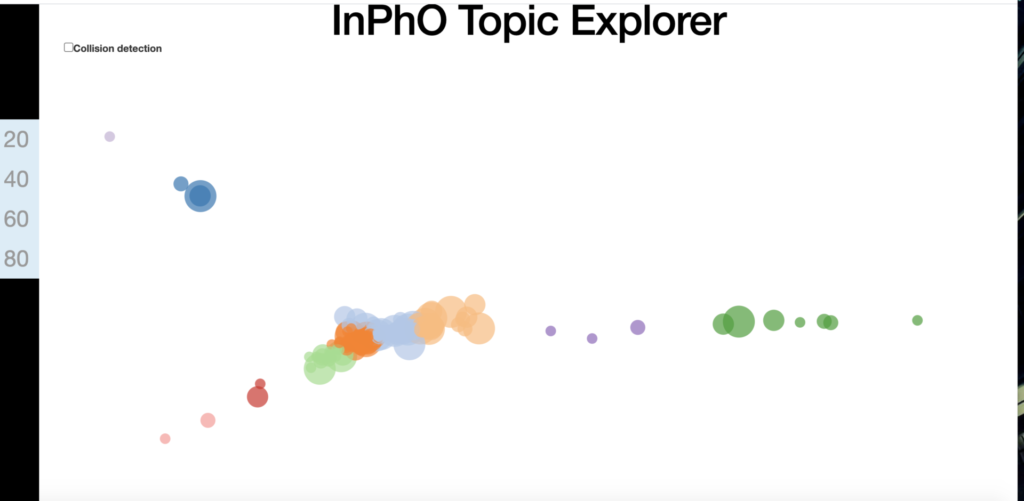

Being curious about the trends of the terms “detective” and “crime” I then utilized HathiTrust’s Bookworm application to further this experiment. The Bookworm search does not use a specific collection, rather it searches HathiTrust’s entire digital library to display a simple double line graph of the usage of terms over time. The end result is a clear visualization of the the trends in term usage over a period of 250 years.

From this graph the most significant take away is the correlation of the uptake in usage of the terms “detective” and “crime” with the rising popularity of detective novels and media from the 1920’s on. Prior to 1920, as is confirmed by the InPho Topic Model, the term “detective” was used in mostly a non-fictional or informational capacity.

A downside to both Bookworm and the InPho Topic Model Explorer is that the texts themselves are not listed. To access the texts used one would either have to record every volume in a collection or look closer into the TSV file itself, as far as I know. Since I attended the workshop finding my way around HathiTrust was not too difficult, however, they are limited by the scope of information available through their digital library. Although partnered with several institutions, including the Grad Center, you may not have much luck mining more obscure terminology. Some terms include those with ethnic connotations or terms not consistent with Western academia, like terms with regional specificity.